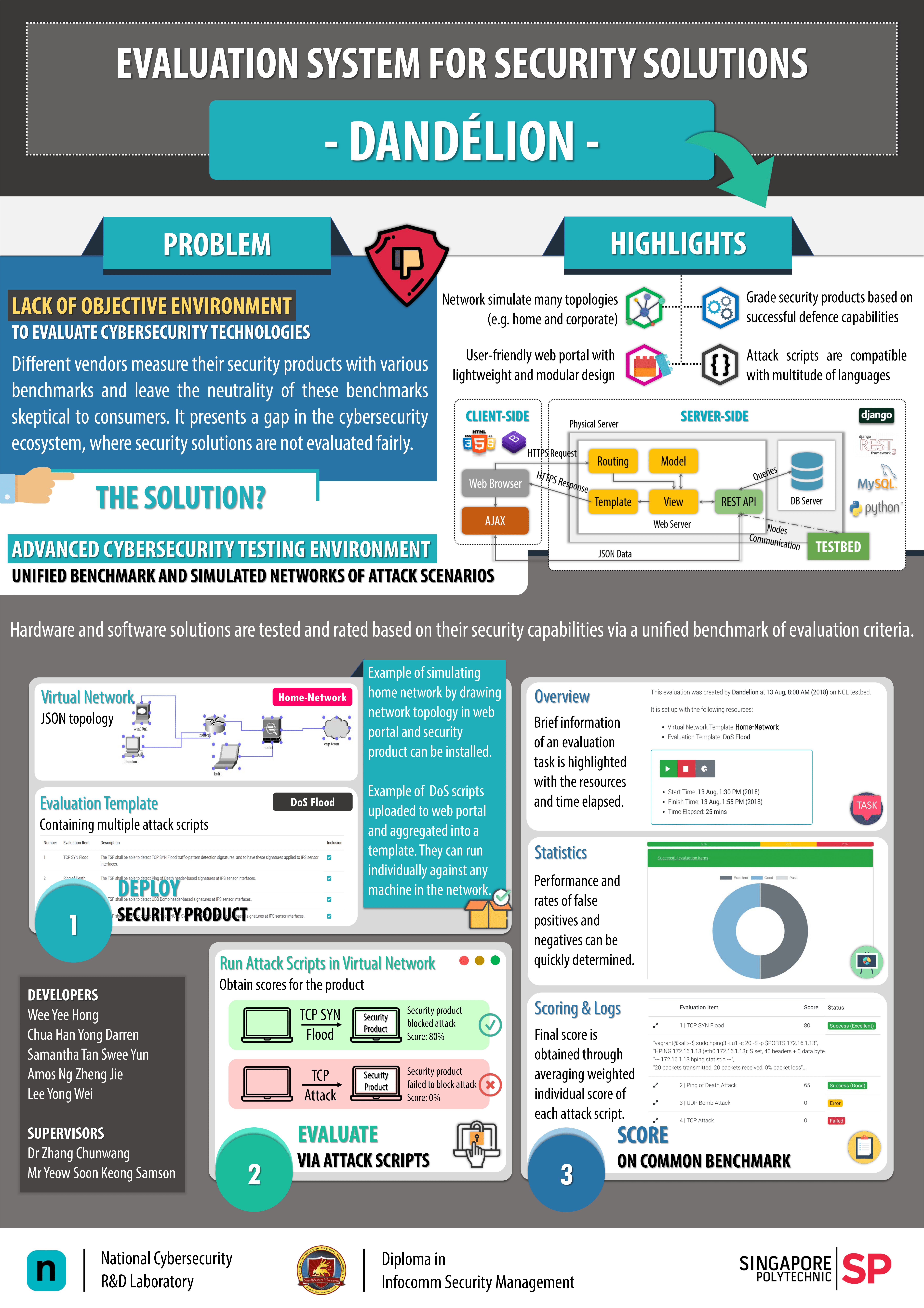

I was appointed vice team leader for my final-year project at Singapore Polytechnic from April 2018 to August 2018, where my team and I were tasked with developing an “Evaluation System for Security Solutions (Dandélion)” for our client, the National Cybersecurity R&D Laboratory (NCL) at NUS. As vice team leader, I had to ensure the team functioned smoothly and delegate responsibilities to each member according to the project requirements.

Background

An adequate sense of trust and confidence is paramount to any system of sellers and buyers, and the cybersecurity ecosystem is no exception. Consumers should have confidence in the cybersecurity solutions they are acquiring, and solution providers should be assured that their superior solutions can be distinguished from the rest. However, there has been a lack of an objective evaluation environment for cybersecurity technologies: different vendors tend to measure their products using different benchmarks, leaving buyers sceptical of the neutrality of these benchmarks.

Goal

The team developed a proof-of-concept cybersecurity technology testing environment based on a unified benchmark for evaluating, validating and scoring new security technologies and solutions. It provides one or more simulated networks with various attack scenarios and can be used to test technologies in sense-making and threat monitoring.

Some modules included in Dandélion:

- A simulated base environment with basic networking elements such as servers, PCs, switches, routers and firewalls

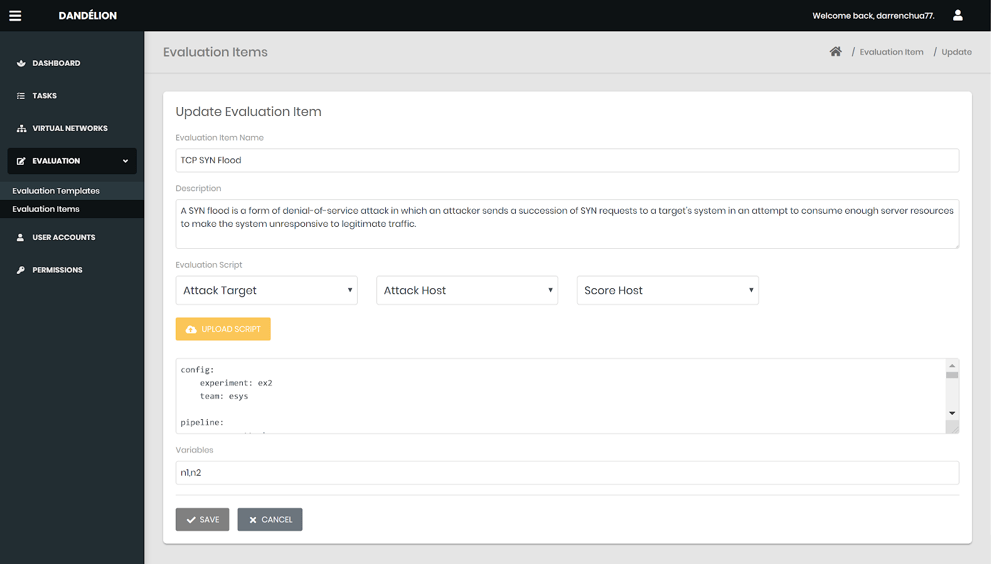

- An attack traffic generator for various attack scenarios and techniques

- An instrumentation framework for automatic scoring of security solutions

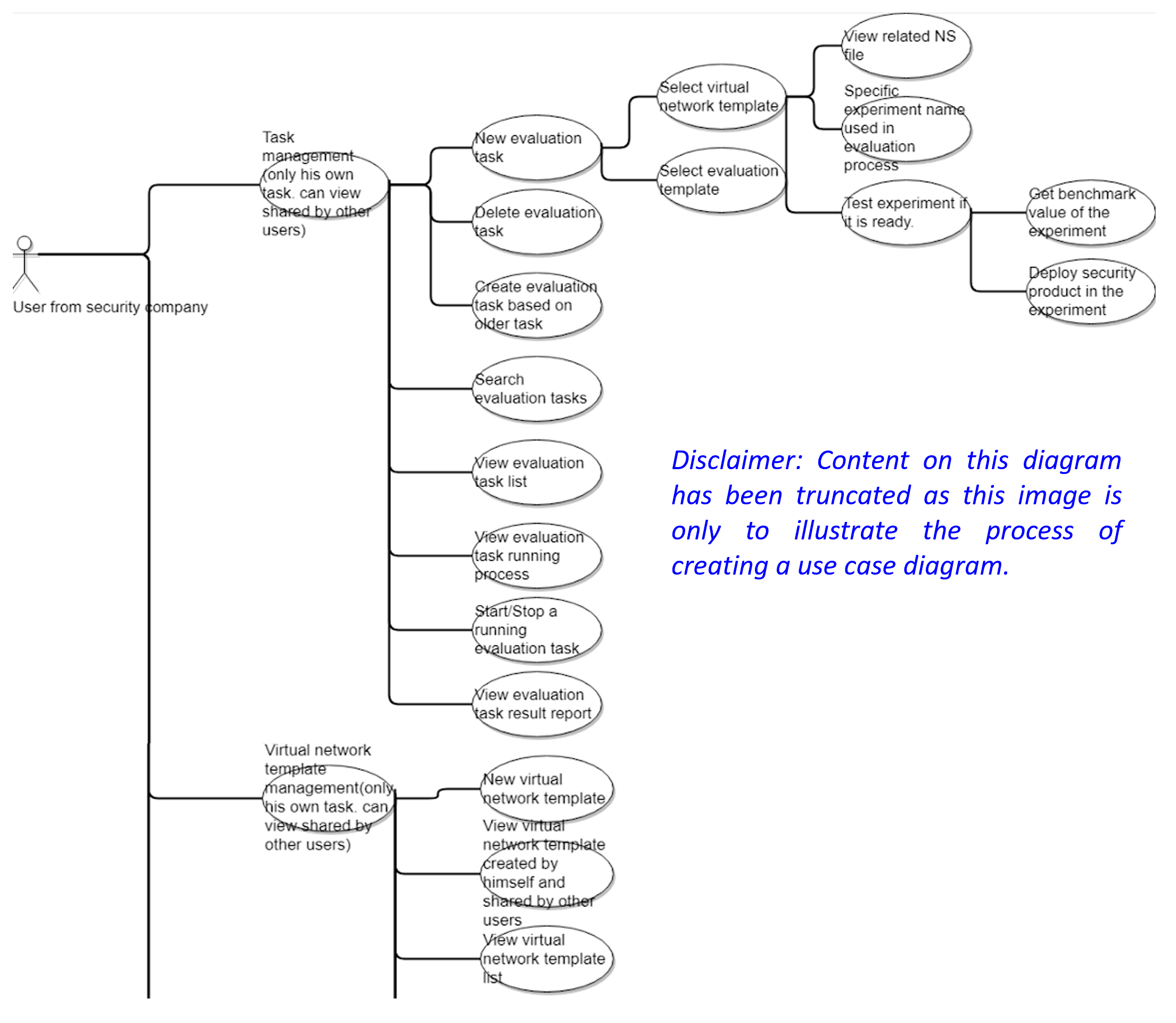

Requirement Analysis

Our project was based on the Scrum project management framework, in which we delivered our work in short cycles known as sprints. We began by identifying the application requirements from user stories and building a product backlog that tagged each requirement with a priority level. This was accompanied by a use case diagram that consolidated the high-level functions and scope of the evaluation system.

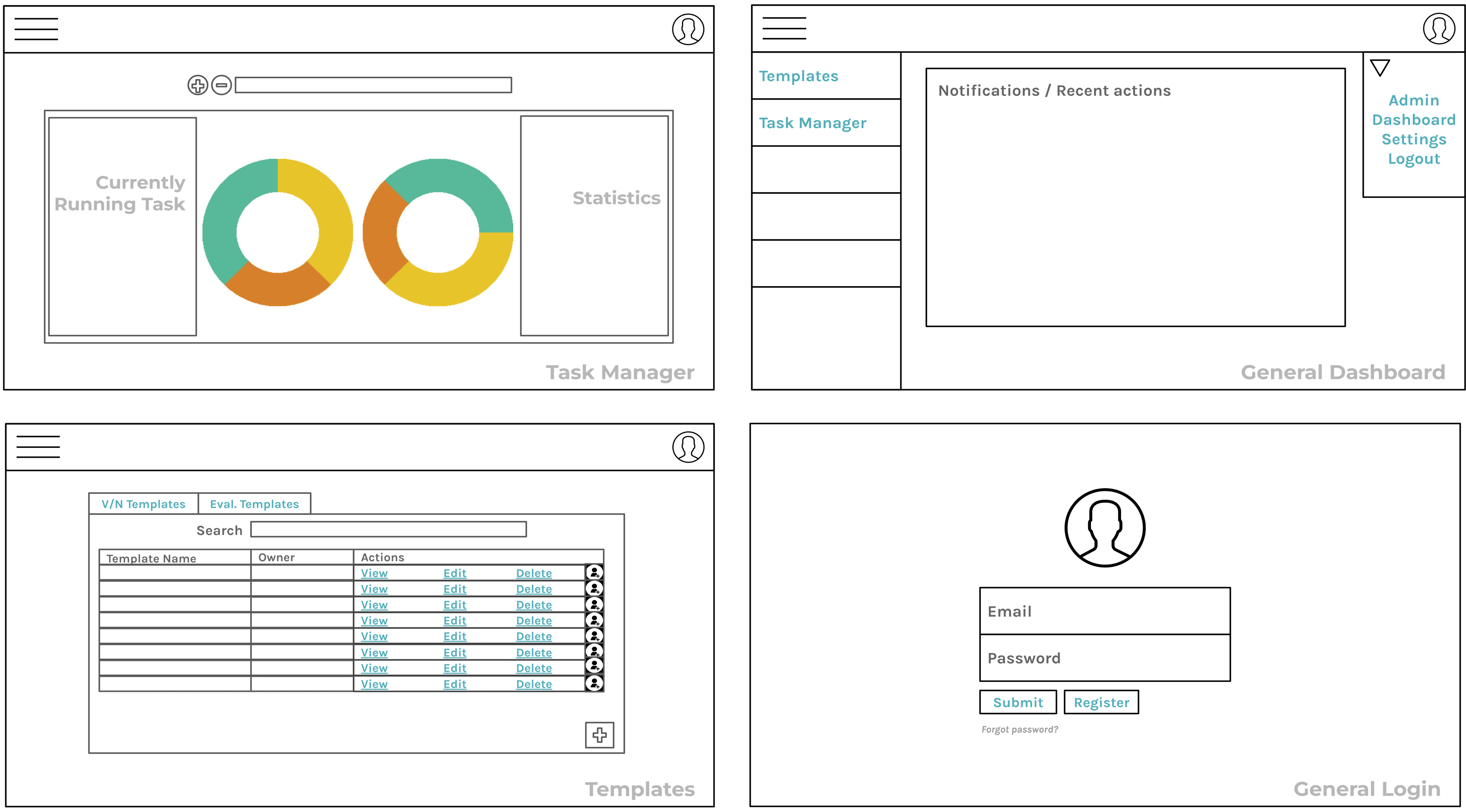

Following that, we created wireframe UI prototypes together with our client to simulate what the eventual web application should look like. Apart from discussing the frontend, we also had to consider how the backend testbed environment and servers would interact with our frontend web portal, i.e. how to pack our attack scripts into evaluation templates and use them on our virtual nodes with ease.

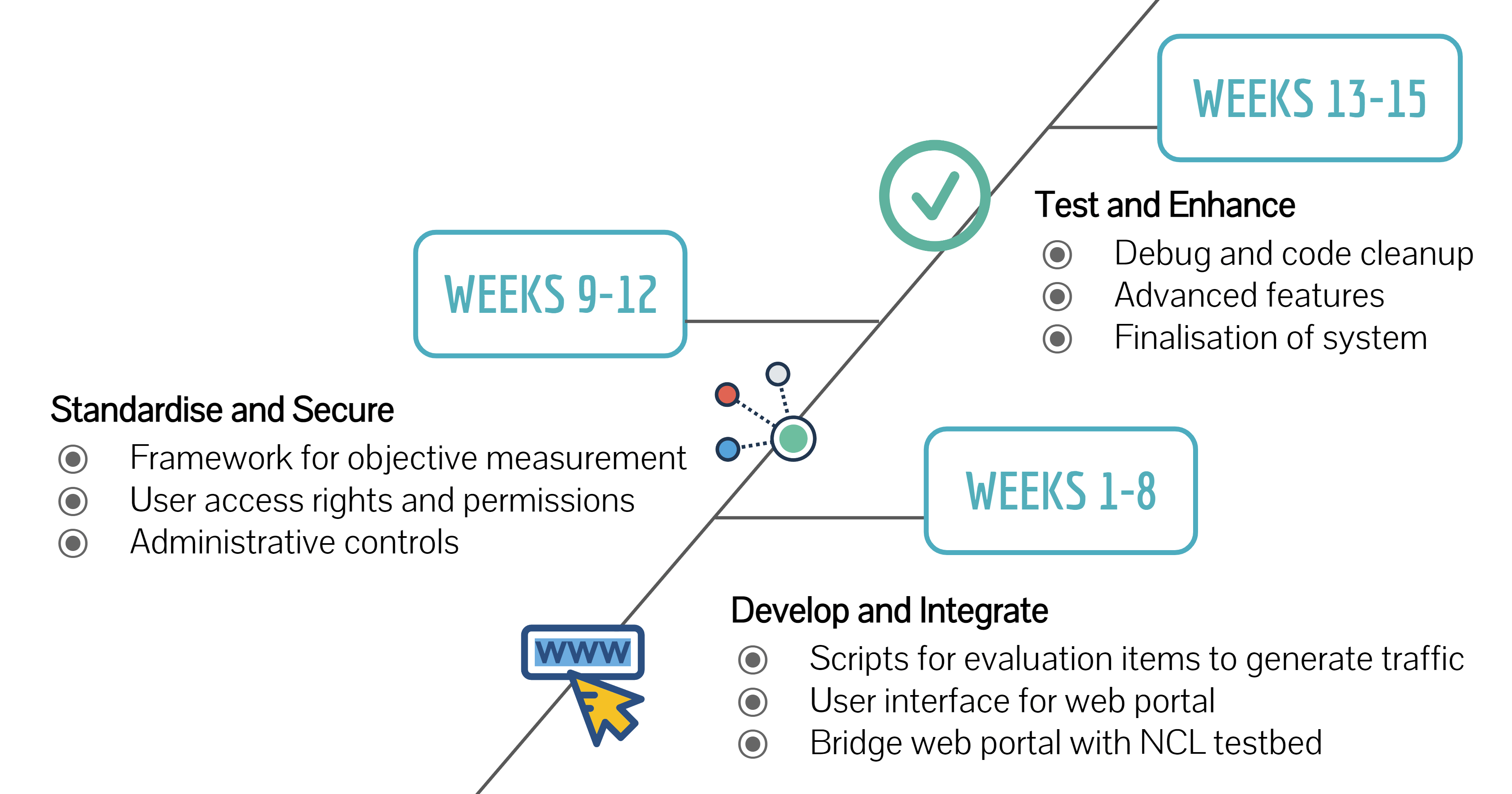

Formalizing these requirements took the first four weeks. The toughest part was agreeing on what the task evaluation report would look like in the evaluation system, though I am unable to reveal detailed information about the project in this public writeup. The team then had the remaining eleven weeks to work on the actual product and testing. The timeline below is an estimate of the milestones over the entire fifteen weeks.

Product Development

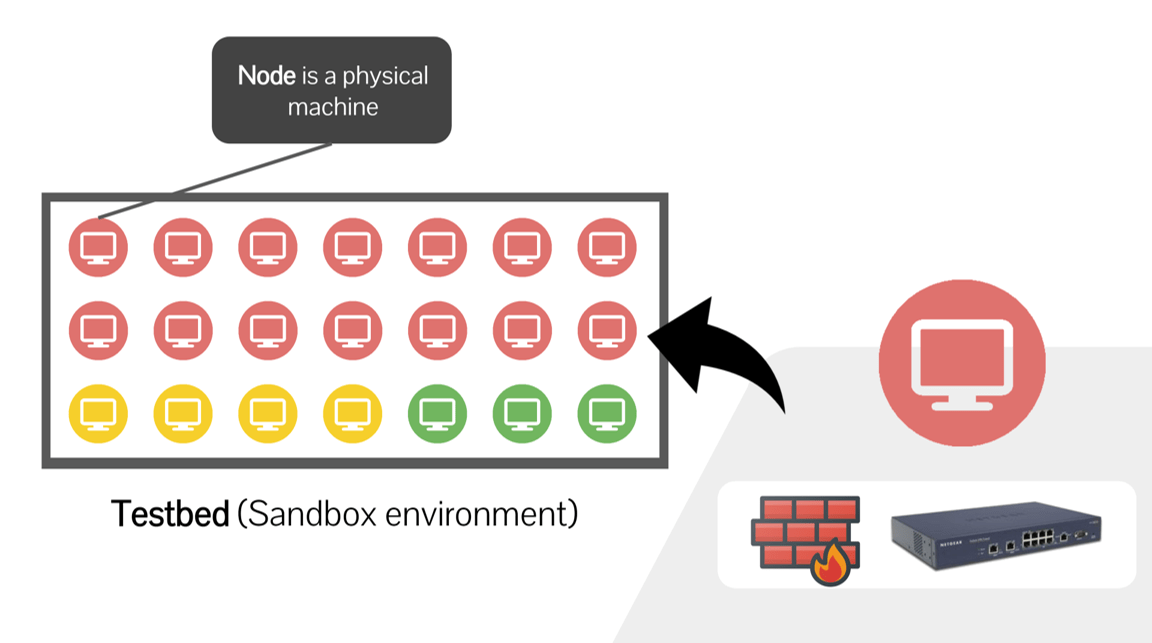

The evaluation system was conceived and realised in collaboration with experts from NCL. NCL is a national shared infrastructure launched in February 2017 by the National University of Singapore. It provides repeatable and controllable experimentation environments, in addition to computing resources for cybersecurity research. While the team worked on the development of the evaluation system, NCL provided a testbed that served as the basis for conducting experiments on cybersecurity solutions.

The evaluation system would use the testbed nodes to set up virtual networks with different topologies and virtual machines with different operating systems as the testing environment. Attack scripts could be run from a virtual machine in one node against virtual machines in other nodes while the cybersecurity solution was installed in the network, to determine whether the solution had sufficient defensive capabilities to detect or prevent these attacks.

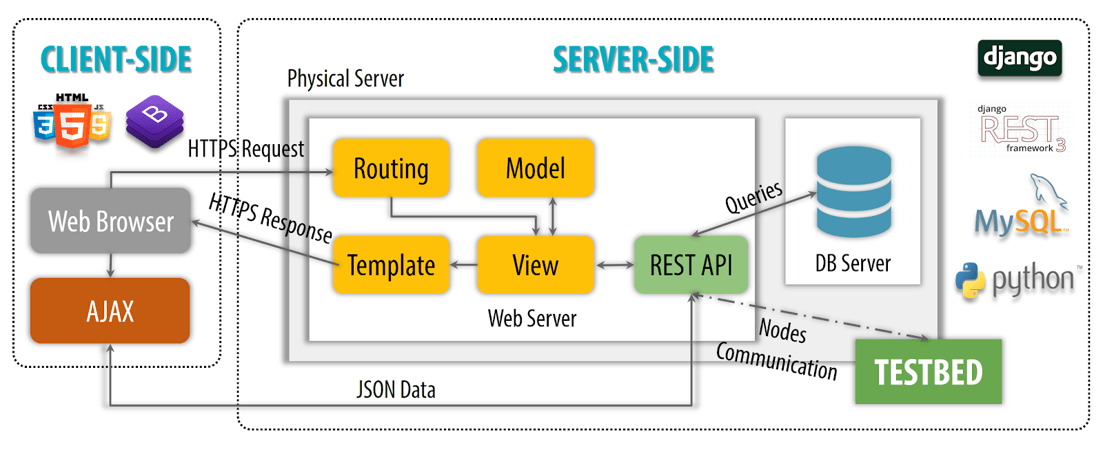

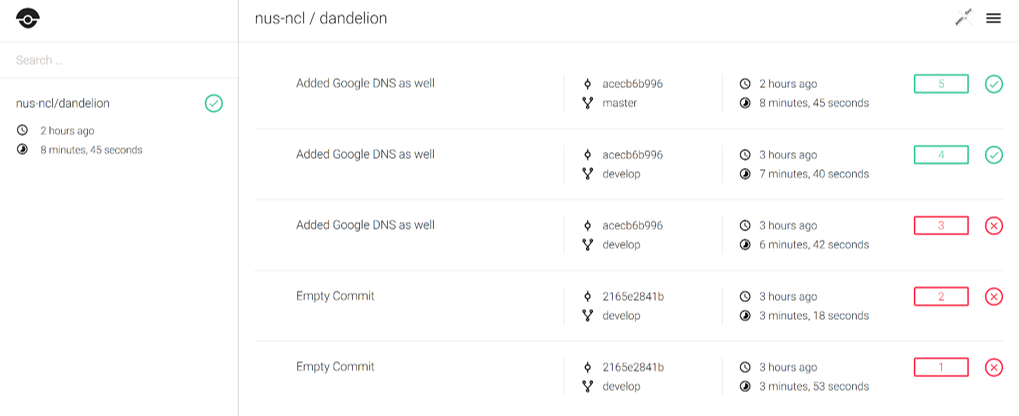
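The attack-and-defend flow described above lends itself to a simple scoring idea. The snippet below is only a minimal sketch of how detection and prevention outcomes could be combined into a single score. The actual Dandélion scoring logic is confidential, so the `AttackResult` fields, the scenario names and the equal weighting are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackResult:
    """Outcome of one attack scenario (hypothetical structure)."""
    scenario: str    # illustrative scenario name, not from the real project
    detected: bool   # did the security solution raise an alert?
    prevented: bool  # did the security solution block the attack?


def score(results, detect_weight=0.5, prevent_weight=0.5):
    """Return a 0-100 score from detection and prevention rates.

    The weights are assumed values; a real benchmark would define its own.
    """
    if not results:
        raise ValueError("no attack results to score")
    total = len(results)
    detected = sum(r.detected for r in results)
    prevented = sum(r.prevented for r in results)
    return 100.0 * (detect_weight * detected / total
                    + prevent_weight * prevented / total)


sample = [
    AttackResult("port_scan", detected=True, prevented=False),
    AttackResult("sql_injection", detected=True, prevented=True),
    AttackResult("brute_force", detected=False, prevented=False),
    AttackResult("phishing_payload", detected=True, prevented=True),
]
print(score(sample))
```

Because every solution is run against the same scenarios and scored with the same formula, two vendors' results become directly comparable, which is the point of a unified benchmark.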

An interactive web interface for the evaluation system was developed using a combination of tools such as Docker, Python 3, Django, a REST API framework, AJAX, JavaScript, MySQL, HTML5, CSS3, Bootstrap 4 and YAML, covering the configuration of attack scripts, the setup of the virtual environment, and the creation and management of evaluation tasks. During development, we used GitHub and Drone CI to facilitate code maintenance and integration.
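To give a feel for what an evaluation template might contain, here is a minimal sketch that validates a template after it has been loaded into a Python dictionary (for example, by a YAML loader). The real template format cannot be shared, so the keys `name`, `topology` and `attack_scripts`, as well as the sample values, are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical keys; the real template schema is not public.
REQUIRED_KEYS = ("name", "topology", "attack_scripts")


@dataclass
class EvaluationTemplate:
    name: str
    topology: str
    attack_scripts: list = field(default_factory=list)


def parse_template(raw: dict) -> EvaluationTemplate:
    """Validate a loaded template dictionary and return a typed object."""
    missing = [key for key in REQUIRED_KEYS if key not in raw]
    if missing:
        raise ValueError(f"template is missing keys: {', '.join(missing)}")
    scripts = raw["attack_scripts"]
    if not isinstance(scripts, list) or not scripts:
        raise ValueError("attack_scripts must be a non-empty list")
    return EvaluationTemplate(
        name=raw["name"],
        topology=raw["topology"],
        attack_scripts=list(scripts),
    )


# Illustrative input, shaped like the result of loading a YAML file.
raw_template = {
    "name": "web-server-baseline",
    "topology": "three-node-lan",
    "attack_scripts": ["port_scan.py", "sql_injection.py"],
}
template = parse_template(raw_template)
print(template.name, len(template.attack_scripts))
```

Validating the template up front means a malformed configuration is rejected before any virtual nodes are provisioned.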

This called for a Continuous Delivery approach to software development: code development, unit tests and integration tests were done in the development environment before changes moved to the staging environment for security and usability tests. Once everything was ready, we pushed the changes to the production environment.
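The promotion flow through the three environments can be sketched as a small gate function. This is an illustration of the idea only, not our Drone CI configuration; the check names and the `promote` helper are assumptions.

```python
# Each environment and the checks a change must pass before leaving it.
# Check names are illustrative assumptions.
STAGES = (
    ("development", ("unit", "integration")),
    ("staging", ("security", "usability")),
)


def promote(check_results):
    """Return the environment a change ends up in.

    check_results maps a check name to True (passed) or False (failed).
    A change stays in the first environment whose checks it fails,
    and reaches production only when every check has passed.
    """
    for environment, checks in STAGES:
        for check in checks:
            if not check_results.get(check, False):
                return environment
    return "production"


print(promote({"unit": True, "integration": True,
               "security": True, "usability": True}))
print(promote({"unit": True, "integration": True,
               "security": False, "usability": True}))
```

A missing check result is treated as a failure, so a change cannot be promoted by skipping a test.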

Utilizing Scrum

As part of Scrum, our members were assigned roles including product owner, user experience lead, scrum master, technical lead and R&D executive. The scrum master played an important role in the project, as she had to manage how information was exchanged using the Scrum methodology. For example, we had to hold a daily stand-up meeting to share what we had worked on, what we were going to work on next and what issues each member faced.

Despite this, our team felt that weekly stand-ups would be better, as daily stand-ups added administrative overhead on top of the hours we spent on other subjects in our school curriculum that semester. However, a useful part of Scrum was the monthly sprint review, during which we would demonstrate features to our client and provide updates on what we had implemented for the evaluation system.

These reviews were great opportunities to identify areas for improvement and to give one another feedback for the betterment of the project. We also tracked our individual progress regularly using burndown and work-time availability charts.

Outcome

The evaluation system sought to bridge the trust gap between vendors and consumers by allowing vendors to measure their solutions using the same benchmark and platform. This enables vendors to accurately convey the functionalities of their security solutions, so that consumers can more easily trust a vendor's solution for their use case.

The web portal was made user-friendly with a simple URL structure that does not exceed three levels. Links on the navigation bar lead to other pages where the user can view more information. Moreover, as the navigation bar is on every page, users can easily return to the main pages.
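The three-level rule is easy to check automatically. The sketch below counts the path segments of a URL using Python's standard `urllib.parse`. The example URLs are made up for illustration and are not the portal's real routes.

```python
from urllib.parse import urlparse

MAX_DEPTH = 3  # the portal's limit on URL levels


def url_depth(url):
    """Count the non-empty path segments in a URL."""
    path = urlparse(url).path
    return len([segment for segment in path.split("/") if segment])


def within_depth_limit(url, max_depth=MAX_DEPTH):
    """Return True if the URL path is no deeper than max_depth levels."""
    return url_depth(url) <= max_depth


# Hypothetical routes used only to exercise the check.
print(within_depth_limit("https://example.com/tasks/42/report/"))
print(within_depth_limit("https://example.com/tasks/42/report/details"))
```

A check like this could run over every registered route in a test suite, so that a new page that breaks the rule is caught early.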

Due to the confidentiality of the project, detailed information cannot be disclosed in this public writeup. That aside, my team was honoured to showcase this project at the Singapore International Cyber Week, GovWare Conference 2018 and Singapore Polytechnic Open House 2019. The success of this proof-of-concept evaluation system would not have been possible without the support of our supervisors and mentors at Singapore Polytechnic and the National Cybersecurity R&D Laboratory.

Check out the promotional video for our project here: